A few days ago, I shared a post about face detection and embedding extraction (if you haven’t read it, I highly recommend giving it a look first!). Today, I’m excited to delve deeper into how face authentication using AI works. Specifically, I’ll demonstrate how embeddings extracted from a series of faces can be used by a simple Support Vector Machine (SVM) model to create a robust face authenticator.

This approach leverages the power of AI to enhance security measures, making the authentication process not only more secure but also more efficient and user-friendly. Join me as we explore the steps to implement this technology in Python, transforming theoretical knowledge into a practical tool that can be applied in real-world scenarios.

Although numerous blogs discuss recognizing faces from a database, I aim to offer a fresh perspective by introducing a script that trains a model to distinguish a single individual. This approach transforms the task into a binary classification problem focused on identifying one person. Let’s delve into the details of this technique!


Requirements

I don’t usually add a “requirements” section to my posts, but I consider this post to be slightly more challenging, technically speaking, as it requires some work of your own. I will go deeper into the details in a moment, but this process requires you to extract the embeddings of a series of images, both from a dataset and from your own set of images, which you will use for training your own model. Therefore, you will need to:

- Download any labelled and publicly available dataset of faces, like Labeled Faces in the Wild or VGGFace2. This post will use all the classes contained within the Kaggle version of VGGFace2 (around 4605 different people), but I have had good results with as few as 10 classes from this same dataset for the training process.

- Make sure to have a series of images of your own face, or of someone who has granted you permission to use their face for testing the authentication model. There is no “magic number” for how many images you need, but I have found that at least 10 images per class provides great results.

- Dump/extract the embeddings of all the classes contained within your selected dataset, as well as the embeddings of all the photos you have gathered for training the model. Make sure to follow my tutorial on face detection and embedding extraction to know how to do it!

Why Use SVMs for Face Authentication?

In the quest to harness the potential of Artificial Intelligence (AI) for enhancing security measures, particularly in the realm of face authentication, the choice of algorithm plays a pivotal role. Among the myriad of machine learning algorithms available, Support Vector Machines (SVMs) stand out as a particularly effective choice for this application. Although I have already written other posts specifically addressing SVMs and their benefits, the main pros and cons in this particular case are:

Pros

- Exceptional Performance in High-Dimensional Spaces: Face authentication tasks involve analyzing complex, high-dimensional facial data. SVMs are renowned for their ability to efficiently manage high-dimensional spaces, identifying the optimal separating hyperplane with maximum margin. This capability is essential for accurately classifying facial features into authenticated and non-authenticated categories.

- Strong Guard Against Overfitting: SVMs come with built-in mechanisms to combat overfitting, a common challenge where models perform well on training data but poorly on new data. The regularization parameter in SVMs helps in achieving a balance between maximizing the margin and minimizing the classification error, ensuring the model’s robustness and generalizability.

- Flexibility with the Kernel Trick: The kernel trick is a powerful feature of SVMs that allows them to handle non-linear data by mapping input features into higher-dimensional spaces. This adaptability is particularly valuable in face authentication, where facial features can exhibit complex, non-linear relationships.

- Optimal for Binary Classification Tasks: As I shared before, I was interested in showing how to recognize 1 single user per model due to its convenience in face authentication systems. For that problem, face authentication becomes a sort of a binary classification problem, determining whether a face matches a known identity. SVMs are inherently designed for binary classification, making them especially efficient for distinguishing between authenticated and non-authenticated faces.
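To make the kernel trick point a bit more concrete, here is a minimal sketch on synthetic data (not face embeddings). It assumes scikit-learn is installed and uses `make_circles` to build two classes where one forms a ring around the other, so no straight line can separate them. The dataset, the RBF kernel and every parameter value are illustrative choices, not part of the controller shown later.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic, non-linearly separable data: one class forms a ring around the other.
features, labels = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(
    features, labels, test_size=0.25, random_state=0, stratify=labels
)

# Same algorithm, two kernels: a linear one and a non-linear (RBF) one.
linear_model = SVC(kernel="linear").fit(x_train, y_train)
rbf_model = SVC(kernel="rbf").fit(x_train, y_train)

linear_accuracy = linear_model.score(x_test, y_test)
rbf_accuracy = rbf_model.score(x_test, y_test)

print(f"linear kernel accuracy: {linear_accuracy:.2f}")
print(f"RBF kernel accuracy:    {rbf_accuracy:.2f}")
```

On data like this, the non-linear kernel should score clearly higher than the linear one, which is the behaviour the kernel trick bullet describes.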

Cons (and some limitations)

- Computational Resources for Large Datasets: Training SVMs on very large datasets can be computationally intensive and time-consuming. We will use a limited dataset for the training, but in other scenarios the cost of training a kernel SVM grows at least quadratically with the number of training samples, which may pose challenges when dealing with vast amounts of facial data.

- Sensitivity to Parameter Selection: The performance of SVMs can be highly sensitive to the choice of parameters, such as the regularization parameter and the kernel parameters. Incorrect parameter settings can lead to suboptimal performance, requiring careful tuning and validation.

- Binary Nature: Although SVMs are excellent for binary classification problems, extending them to multi-class scenarios—such as identifying multiple individuals in a face authentication system—can require additional considerations, such as implementing one-vs-one or one-vs-all strategies.
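The sensitivity to parameters mentioned in the list above can be explored with a small grid search. The following is only a sketch on synthetic data: the feature count, the candidate values for `C` and `gamma`, and the 5-fold cross-validation are arbitrary assumptions, and with real embeddings you would swap in your own `x_train` and `y_train`.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in for a set of embeddings with binary labels.
features, labels = make_classification(
    n_samples=300, n_features=32, n_informative=8, random_state=0
)

# Candidate values are illustrative only; tune the ranges to your own data.
parameter_grid = {
    "C": [0.1, 1.0, 10.0],
    "gamma": ["scale", 0.01, 0.1],
}

# Evaluate every combination with 5-fold cross-validation.
search = GridSearchCV(SVC(kernel="sigmoid"), parameter_grid, cv=5)
search.fit(features, labels)

print("best parameters:", search.best_params_)
print(f"best cross-validated accuracy: {search.best_score_:.3f}")
```

Cross-validation is used here so that the chosen parameters are judged on data the model has not seen during fitting, which ties back to the overfitting point in the pros list.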

In summary, while SVMs present a powerful tool for AI-powered face authentication with their high-dimensional performance, overfitting protection, and flexibility, it’s important to consider their computational demands, sensitivity to parameter settings, and binary nature. Balancing these pros and cons is key to effectively implementing SVMs in face authentication systems. Also, the script that I share with you today is a mere demo of the potential of binary classifiers for this authentication method, so you will need to consider the particulars of your own architecture and which model and approach best fits your use case. Enough with the introduction, let’s check the code itself.

Face authentication: How does it work?

The algorithm I am sharing today employs face embeddings, a form of deep learning representation that encodes facial features into a compact numerical form. These embeddings capture the unique attributes of a face, such as the distance between eyes, nose shape, and jawline contour, transforming these complex patterns into a high-dimensional space where they can be efficiently compared and analyzed.
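One common way to compare two embeddings is cosine similarity. The sketch below uses random vectors as stand-ins for real embeddings: the 128-dimension size, the noise level and the variable names are all assumptions made for illustration, and the SVM approach in this post does not rely on this function.

```python
import numpy as np


def cosine_similarity(first, second):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    first = np.asarray(first, dtype=float)
    second = np.asarray(second, dtype=float)
    return float(np.dot(first, second) / (np.linalg.norm(first) * np.linalg.norm(second)))


rng = np.random.default_rng(seed=0)

# Stand-ins for embeddings: two "photos" of person A are close to each other,
# while person B is an unrelated random vector.
person_a_photo_1 = rng.normal(size=128)
person_a_photo_2 = person_a_photo_1 + rng.normal(scale=0.1, size=128)
person_b_photo = rng.normal(size=128)

same_person = cosine_similarity(person_a_photo_1, person_a_photo_2)
different_person = cosine_similarity(person_a_photo_1, person_b_photo)

print(f"same person:      {same_person:.3f}")
print(f"different person: {different_person:.3f}")
```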

The process starts with the extraction of face embeddings from a dataset. In my case I have chosen VGGFace2, which I obtained from Kaggle, because it is fairly balanced and has a large enough number of classes for us to use. This dataset comprises images of faces, each labeled according to the identity of the person. The extraction of embeddings is a critical step, as it translates raw facial images into a form that the SVM model can learn from. This is typically achieved using a pre-trained neural network designed for face recognition tasks.

As stated before, you will need to dump the embeddings of the dataset and your own images yourself, but keep in mind that I have written an entire article on how to extract them. You might want to give that one a look before going deeper into this article!

Algorithmic Approach

- Embedding extraction: There are several ways to achieve facial authentication. For example, you could use a special type of Deep Neural Network called a “Convolutional Neural Network” (CNN for short) that will automatically extract the information from the images you input into it.

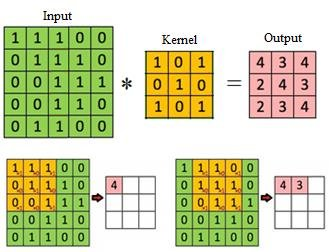

That is done by using a process called convolution, where a certain kernel is used to filter the original image and obtain a numeric representation of it afterwards (check Figure 1 for reference). As we are not using CNNs for the classification itself, we need to figure out a way of extracting mathematical representations of the faces we will use for training the model of our choice, in this case an SVM. That is where the concept of embedding becomes essential for proceeding with the training of the model itself. Therefore, the first step (as stated several times before) is to extract the embeddings of several images representing multiple faces, which we will use to tell apart the one face we need to authenticate after training our model.

- Training the SVM Model: With embeddings in hand, the algorithm proceeds to train the SVM model. SVM is a supervised learning model used for binary classification tasks. It works by finding the hyperplane that best separates the embeddings of different classes (in this case, authorized vs. unauthorized users) in the high-dimensional space. The SVM model in this post is trained with a sigmoid kernel, one of the non-linear kernels available in scikit-learn, which lets the model handle the non-linear distribution of face embeddings.

- Model Training Dynamics: During training, the model learns to associate specific embeddings (i.e., the numerical representations of facial features) with their corresponding class labels (authorized or unauthorized). The goal is to achieve a separation with maximum margin between the two classes, thereby minimizing the risk of misclassification.

- Prediction and Authentication: Once trained, the SVM model is used to authenticate faces. For a given face, its embedding is extracted and fed into the model. The model then predicts the probability of the face belonging to each class. Based on these probabilities, the system determines whether the face is authorized (known) or unauthorized (unknown), thereby granting or denying access accordingly.
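The training and prediction steps in the list above can be sketched on synthetic data. Everything here is an assumption made for illustration: the 128-dimension vectors stand in for real embeddings, the "user" is simulated as a tight cluster around a random point, and the sample counts are arbitrary. Only the labelling convention (0 for the authorized user, 1 for everyone else) and the `SVC(kernel="sigmoid", probability=True)` setup mirror the controller shown later.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(seed=0)
embedding_size = 128  # assumed size; depends on your embedding extractor

# Simulated embeddings: the user's photos cluster around one point,
# while the control faces are spread across the whole space.
user_center = rng.normal(size=embedding_size)
user_embeddings = user_center + rng.normal(scale=0.2, size=(20, embedding_size))
other_embeddings = rng.normal(size=(200, embedding_size))

# Class 0 = authorized user, class 1 = everyone else.
x_train = np.vstack([user_embeddings, other_embeddings])
y_train = np.array([0] * len(user_embeddings) + [1] * len(other_embeddings))

model = SVC(kernel="sigmoid", probability=True)
model.fit(x_train, y_train)

# Two unseen "photos": one from the user, one from a stranger.
new_user_photo = user_center + rng.normal(scale=0.2, size=embedding_size)
stranger_photo = rng.normal(size=embedding_size)
probabilities = model.predict_proba([new_user_photo, stranger_photo])

# Each row holds [P(class 0), P(class 1)], following model.classes_.
print("classes:", model.classes_)
print("user photo:    ", probabilities[0])
print("stranger photo:", probabilities[1])
```

Note that the columns returned by `predict_proba` follow the order of `model.classes_`, so with labels 0 and 1 the first column is the probability of being the authorized user.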

Figure 1: A very basic graphical representation of how a convolutional neural network will “see” the data coming from an image. In the simplest case, the RGB values of the image are filtered by a matrix called a ‘kernel’ and then fed into a neural network. Check the source of this image (and a deeper explanation) here!

Face authentication using AI: The code

I hate to be “that author”, but in this case it is particularly important that you have followed my tutorial on embedding extraction and face detection, as I expect you to dump the embeddings of your selected dataset on your own due to copyright reasons. With the controller we developed in that entry, you could create a script that loops over the files within the dataset and produces a single pickle with the embeddings of several people, which will be compared against the images you provide. Then, the SVM will learn how to tell you apart from the classes of the dataset itself.
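A dumping script along those lines could look like the sketch below. It assumes the dataset is laid out as one sub-folder per person and that the pickle holds a dictionary mapping each class name to a list of embeddings, which is the shape the controller further down iterates over. The function name `dump_dataset_embeddings` and the `embed_directory` callable are hypothetical: the callable stands in for whatever extraction routine you built in the previous tutorial, and the demo uses a fake extractor so the snippet runs on its own.

```python
import os
import pickle
import tempfile


def dump_dataset_embeddings(dataset_root, output_path, embed_directory):
    """Store {class_name: [embedding, ...]} for every sub-folder of dataset_root."""
    embeddings_by_class = {}
    for class_name in sorted(os.listdir(dataset_root)):
        class_directory = os.path.join(dataset_root, class_name)
        if not os.path.isdir(class_directory):
            continue  # skip stray files at the top level
        embeddings = list(embed_directory(class_directory))
        if embeddings:  # skip classes where no face was found
            embeddings_by_class[class_name] = embeddings
    with open(output_path, "wb") as file:
        pickle.dump(embeddings_by_class, file)
    return embeddings_by_class


# Hypothetical extractor used only for this demo: one dummy vector per file.
def fake_embed_directory(class_directory):
    return [[0.0, 1.0] for _ in os.listdir(class_directory)]


with tempfile.TemporaryDirectory() as workspace:
    # Build a tiny fake dataset: two people, one (empty) image file each.
    for person in ("person_a", "person_b"):
        person_directory = os.path.join(workspace, "dataset", person)
        os.makedirs(person_directory)
        open(os.path.join(person_directory, "photo.jpg"), "wb").close()

    pickle_path = os.path.join(workspace, "embeddings.pickle")
    dump_dataset_embeddings(
        os.path.join(workspace, "dataset"), pickle_path, fake_embed_directory
    )

    # Reload the pickle to confirm its structure.
    with open(pickle_path, "rb") as file:
        reloaded = pickle.load(file)

print(sorted(reloaded))
```

In a real run you would pass a function that wraps your own embedding extractor instead of `fake_embed_directory`.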

Also, make sure you have the following packages installed and updated! Run these commands within an environment or container you previously built for this project:

pip install numpy

pip install scikit-learn

As we did before, I will provide the code for a controller that will be in charge of the learning and prediction process for new faces. I am unaware whether industry standards agree with this, but my approach is to train a single model, keep it locally and use it to authenticate a single user at a time. However, any input on this is very much appreciated!

'''
Code by Rodrigo C. Curiel, 2024.
Check this and more snippets at www.curielrodrigo.com
'''

# Library imports
import os
import pickle

import numpy as np
from sklearn.svm import SVC

# Importing the controller for embedding dumping (you can find the code here -> https://curielrodrigo.com/face-detection-with-deep-neural-networks-the-ultimate-guide/)
from embedding_extraction_controller import EmbeddingExtractionController


# Main controller
class SvmFaceAuthenticationController:

    def __init__(
        self,
        face_recognition_model_path: str,
        face_recognition_config_path: str
    ):
        # Set a local embedding extractor. Check my post on this controller for more details on how it works
        self.embedding_extractor = EmbeddingExtractionController(
            config_file=face_recognition_config_path,
            model_file=face_recognition_model_path
        )

    # Pull a new face dataset and generate an SVM model to create predictions
    def learn_new_face(
        self,
        path_for_new_face_dataset: str,
        path_for_dumped_embeddings: str,
        user_id: str,
        path_output_for_model: str,
        minimum_amount_of_faces_to_include_for_learning: int,
        maximum_amount_of_faces_to_include_for_learning: int,
        face_detection_confidence: float
    ):
        # Load embeddings (make sure to dump your own embeddings and refer to them adequately here)
        try:
            with open(path_for_dumped_embeddings, 'rb') as file:
                loaded_embeddings = pickle.load(file)
        except Exception as error:
            raise RuntimeError(
                "Dumped embeddings could not be loaded: " + path_for_dumped_embeddings
            ) from error

        # Make sure the target directory exists
        model_path = os.path.join(path_output_for_model, user_id + ".pickle")
        os.makedirs(path_output_for_model, exist_ok=True)

        # Remove (if necessary) the old model
        if os.path.exists(model_path):
            os.remove(model_path)

        # Set a series of variables for training
        x_train = []
        y_train = []

        # Extract the new face's embeddings
        new_face_embeddings = self.embedding_extractor.get_embeddings_from_directory(
            directory=path_for_new_face_dataset,
            maximum_amount_of_faces_per_class=maximum_amount_of_faces_to_include_for_learning,
            minimum_amount_of_faces_per_class=minimum_amount_of_faces_to_include_for_learning,
            face_detection_confidence=face_detection_confidence
        )

        # Add the new face to the training dataset as class 0 (authorized)
        for embedding in new_face_embeddings:
            x_train.append(embedding)
            y_train.append(0)

        # Add the control faces' embeddings as class 1 (unauthorized)
        for _, class_embeddings in loaded_embeddings.items():
            for embedding in class_embeddings:
                x_train.append(embedding)
                y_train.append(1)

        # Create and train the model.
        # We will be using scikit-learn's SVC for this!
        model = SVC(kernel='sigmoid', probability=True, max_iter=-1)
        model.fit(x_train, y_train)  # fits model with training data

        # Store the model at the required directory
        with open(model_path, 'wb') as file:
            pickle.dump(model, file)

    # Perform a prediction for a new image
    def predict_from_model(
        self,
        model_path: str,
        image_path: str,
        face_detection_confidence: float
    ):
        # Load the model
        with open(model_path, 'rb') as file:
            face_authentication_model = pickle.load(file)

        # Get the embedding from the requested image
        try:
            embedding = self.embedding_extractor.get_embeddings_from_image(
                image_path=image_path,
                face_detection_confidence=face_detection_confidence
            )
        except Exception as error:
            raise RuntimeError(
                "No face could be detected on the provided image: " + image_path
            ) from error

        # Return the probabilities as [class 0 (authorized), class 1 (unauthorized)]
        return face_authentication_model.predict_proba([np.asarray(embedding)])[0]

Using the controller

As discussed before, the controller has a collection of methods that let it load the dumped embeddings, extract the embeddings of a new face to be learnt and then train an SVM to tell the new face apart from the others. Then, the model is stored in a way that allows us to access it later for authorizing someone from an image coming from the same person.

Keep in mind that this controller could be enhanced with stronger security measures like anti-spoofing and liveness filters to make sure the image provided is not altered or malicious in any way, but that will have to be addressed later in other posts. For now, let’s see how you would use it!

'''
Code by Rodrigo C. Curiel, 2024.
Check this and more snippets at www.curielrodrigo.com
'''

# Import libraries
import os

import numpy as np

# Import the controller for SVM face authentication
from svm_face_authentication_controller import SvmFaceAuthenticationController

# ! VARIABLES START

# Model for face detection and alignment (get them from my previous post at https://curielrodrigo.com/face-detection-with-deep-neural-networks-the-ultimate-guide/)
face_recognition_model_path = './res10_300x300_ssd_iter_140000.caffemodel'
face_recognition_config_path = './deploy.prototxt.txt'

# Indicate where you stored the embeddings from your dataset
path_for_dumped_embeddings = './<your_own_path>.pickle'

# Dataset of the new person to identify
path_for_new_face_dataset = './<your_path_to_your_own_images>/'

# Where to put the trained model and how to call it
user_id = 'SOME_USER_ID'
path_output_for_model = './'

# Setup for training (as suggested, keep each class' images over 10 and under 1000)
minimum_amount_of_faces_to_include_for_learning = 10
maximum_amount_of_faces_to_include_for_learning = 1000

# You can change this, but the higher it is for training, the better the results you can get
face_detection_confidence = 0.9

# ! VARIABLES END

# Instance a controller
controller = SvmFaceAuthenticationController(
    face_recognition_model_path,
    face_recognition_config_path
)

# Train a model for the new face (and store it)
controller.learn_new_face(
    path_for_new_face_dataset,
    path_for_dumped_embeddings,
    user_id,
    path_output_for_model,
    minimum_amount_of_faces_to_include_for_learning,
    maximum_amount_of_faces_to_include_for_learning,
    face_detection_confidence
)

# The controller stores the model as <path_output_for_model>/<user_id>.pickle
model_path = os.path.join(path_output_for_model, user_id + ".pickle")
directory_to_check = './<path_to_your_test_dataset>/'

# Perform the predictions
for file_name in os.listdir(directory_to_check):
    print("---> " + file_name)
    try:
        # Make the prediction
        prediction = controller.predict_from_model(
            model_path=model_path,
            image_path=os.path.join(directory_to_check, file_name),
            face_detection_confidence=face_detection_confidence
        )
    except KeyboardInterrupt:
        # Let Ctrl+C stop the script
        raise
    except BaseException:
        # Any other failure (for example, no face detected) skips this file
        print("Could not be tested")
        continue

    # Get the index of the most probable class (0 = authorized user)
    class_index = np.argmax(prediction)
    authenticated = bool(class_index == 0)
    print(str(authenticated) + ' in ' + str(prediction[class_index] * 100) + "%")

In summary, this script is a complete workflow for adding a new person to a face authentication system and then using the trained model to authenticate faces from a test dataset. It is important for you to set the variables at the beginning according to your own workspace, as the location of the models, dumped embeddings and train/test images will be defined by your own file structure.
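The loop above accepts a face whenever class 0 is the most probable class, which for two classes means anything over 50%. Depending on your use case, you might prefer a stricter cut-off. The sketch below shows one way to do that; the helper name `is_authenticated` and the 0.8 threshold are hypothetical choices for illustration and should be tuned against your own test images.

```python
import numpy as np


def is_authenticated(probabilities, threshold=0.8, user_class_index=0):
    """Accept only when the authorized-user probability reaches the threshold."""
    probabilities = np.asarray(probabilities, dtype=float)
    return bool(probabilities[user_class_index] >= threshold)


# Probabilities are ordered as [authorized user, everyone else].
print(is_authenticated([0.93, 0.07]))  # True
print(is_authenticated([0.55, 0.45]))  # False: most probable class, but below the threshold
```

A higher threshold trades convenience for security: fewer strangers get through, but the real user may be rejected more often.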

Conclusion

In conclusion, this SVM Face Authentication Controller illustrates an approach to face authentication using AI, leveraging a custom embedding extraction instead of relying on convolutional DNNs that tend to be computationally expensive. By meticulously handling datasets, training models, and predicting new instances with precision, today we learnt how to code a snippet that encapsulates a practical implementation of machine learning for security purposes. Don’t forget to explore more about face detection and authentication techniques on my website for comprehensive guides and additional code snippets!
